This episode is a continuation of Q&A #1 Part 1, where I answer YOUR questions!

00:00 Introduction

01:20 Planning for a Good Outcome?

03:10 Stock Picking Advice

08:42 Dumbing It Down for Dr. Phil

11:52 Will AI Shorten Attention Spans?

12:55 Historical Nerd Life

14:41 YouTube vs. Podcast Metrics

16:30 Video Games

26:04 Creativity

30:29 Does AI Doom Explain the Fermi Paradox?

36:37 Grabby Aliens

37:29 Types of AI Doomers

44:44 Early Warning Signs of AI Doom

48:34 Do Current AIs Have General Intelligence?

51:07 How Liron Uses AI

53:41 Is “Doomer” a Good Term?

57:11 Liron’s Favorite Books

01:05:21 Effective Altruism

01:06:36 The Doom Debates Community

Show Notes

PauseAI Discord: https://discord.gg/2XXWXvErfA

Robin Hanson’s Grabby Aliens theory: https://grabbyaliens.com

Prof. David Kipping’s response to Robin Hanson’s Grabby Aliens: https://www.youtube.com/watch?v=tR1HTNtcYw0

My explanation of “AI completeness” (I misspoke in the episode: the term I previously coined is “goal completeness”): https://www.lesswrong.com/posts/iFdnb8FGRF4fquWnc/goal-completeness-is-like-turing-completeness-for-agi

^ Goal-Completeness (and the corresponding Shapira-Yudkowsky Thesis) might be my best/only original contribution to AI safety research, albeit a small one. Max Tegmark even retweeted it.

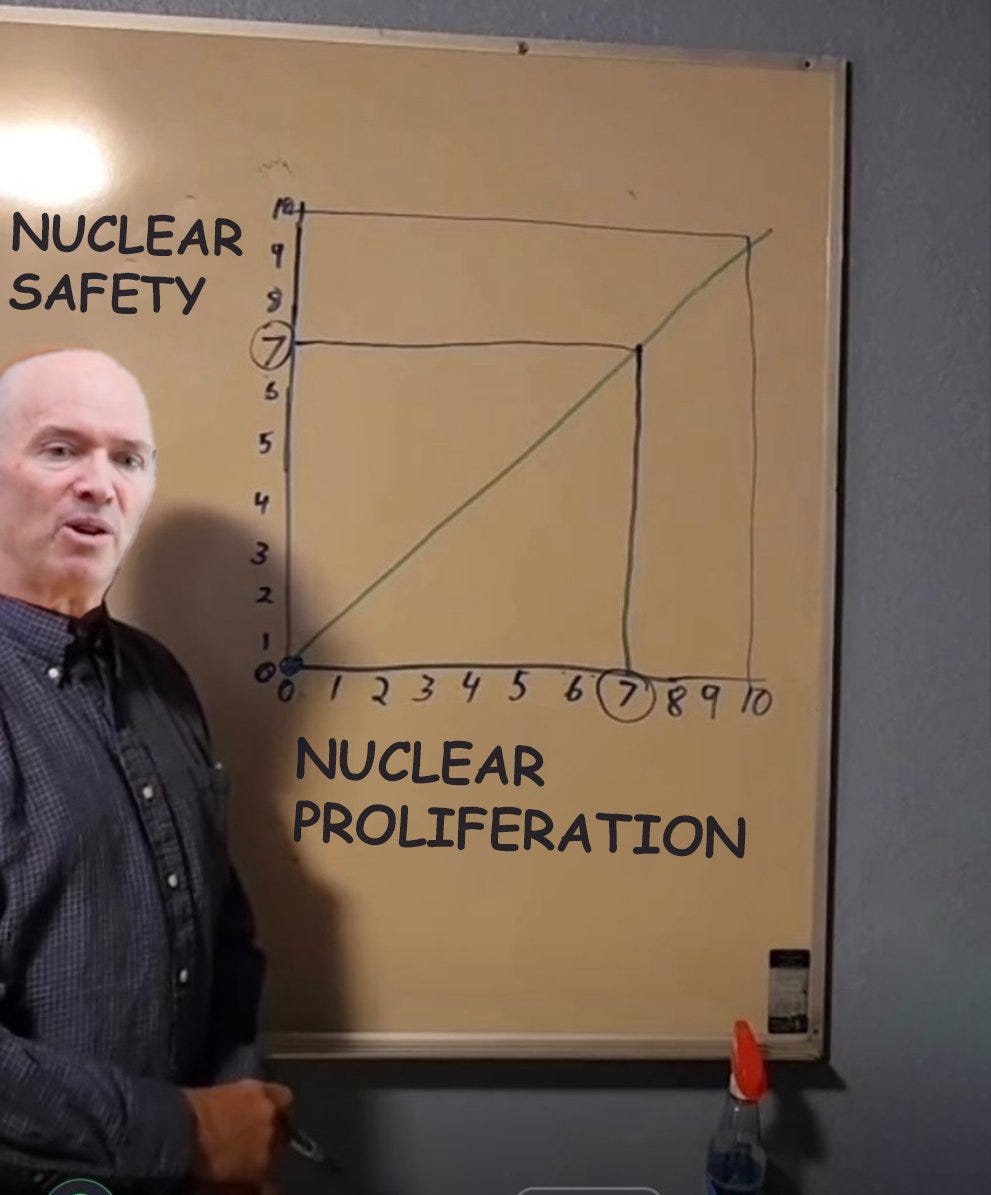

a16z’s Ben Horowitz claiming nuclear proliferation is good, actually: https://x.com/liron/status/1690087501548126209

Doom Debates’ mission is to raise mainstream awareness of imminent extinction from AGI and build the social infrastructure for high-quality debate.

Support the mission by subscribing to my Substack at DoomDebates.com and to youtube.com/@DoomDebates. Thanks for watching.