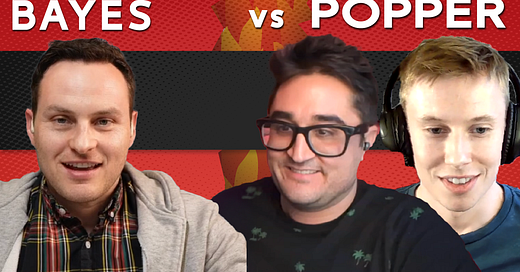

Vaden Masrani and Ben Chugg, hosts of the Increments Podcast, are joining me to debate Bayesian vs. Popperian epistemology.

I’m on the Bayesian side, heavily influenced by the writings of Eliezer Yudkowsky. Vaden and Ben are on the Popperian side, heavily influenced by David Deutsch and the writings of Popper himself.

We dive into the theoretical underpinnings of Bayesian reasoning and Solomonoff induction, contrasting them with the Popperian perspective, and explore real-world applications such as predicting elections and economic policy outcomes.

The debate highlights key philosophical differences between our two epistemological frameworks, and sets the stage for further discussions on superintelligence and AI doom scenarios in an upcoming Part II.

00:00 Introducing Vaden and Ben

02:51 Setting the Stage: Epistemology and AI Doom

04:50 What’s Your P(Doom)™

13:29 Popperian vs. Bayesian Epistemology

31:09 Engineering and Hypotheses

38:01 Solomonoff Induction

45:21 Analogy to Mathematical Proofs

48:42 Popperian Reasoning and Explanations

54:35 Arguments Against Bayesianism

58:33 Against Probability Assignments

01:21:49 Popper’s Definition of “Content”

01:31:22 Heliocentric Theory Example

01:31:34 “Hard to Vary” Explanations

01:44:42 Coin Flipping Example

01:57:37 Expected Value

02:12:14 Prediction Market Calibration

02:19:07 Futarchy

02:29:14 Prediction Markets as AI Lower Bound

02:39:07 A Test for Prediction Markets

02:45:54 Closing Thoughts

Show Notes

Vaden & Ben’s Podcast: https://www.youtube.com/@incrementspod

Vaden’s Twitter: https://x.com/vadenmasrani

Ben’s Twitter: https://x.com/BennyChugg

Bayesian reasoning: https://en.wikipedia.org/wiki/Bayesian_inference

Karl Popper: https://en.wikipedia.org/wiki/Karl_Popper

Vaden's blog post on Cox's Theorem and Yudkowsky's claims of "Laws of Rationality": https://vmasrani.github.io/blog/2021/the_credence_assumption/

Vaden’s disproof of probabilistic induction (including Solomonoff Induction): https://arxiv.org/abs/2107.00749

Vaden’s referenced post about predictions being uncalibrated more than one year out: https://forum.effectivealtruism.org/posts/hqkyaHLQhzuREcXSX/data-on-forecasting-accuracy-across-different-time-horizons#Calibrations

Article by Gavin Leech and Misha Yagudin on the reliability of forecasters: https://ifp.org/can-policymakers-trust-forecasters/

Sources for the claim that superforecasters gave a P(doom) below 1%: https://80000hours.org/2024/09/why-experts-and-forecasters-disagree-about-ai-risk/

https://www.astralcodexten.com/p/the-extinction-tournament

Vaden’s Slides on Content vs Probability: https://vmasrani.github.io/assets/pdf/popper_good.pdf

Doom Debates’ mission is to raise mainstream awareness of imminent extinction from AGI and build the social infrastructure for high-quality debate.

Support the mission by subscribing to my Substack at DoomDebates.com and to youtube.com/@DoomDebates. Thanks for watching.
